Here’s a quick explainer on quantum computing, quantum mechanics, and Google’s new Willow chip. I have a PhD in theoretical chemistry from UC Berkeley, where I did research on quantum computation under Professor K. Birgitta Whaley.

On Dec. 9, Google announced the release of Willow, its newest state-of-the-art quantum computing chip. On its blog, the company boasted that the new processor “performed a standard benchmark computation in under five minutes that would take one of today’s fastest supercomputers 10 septillion (that is, 10^25) years.” This claim would have been sensational enough, but Google also declared that this advance “lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse, a prediction first made by David Deutsch.” This sentence captured the imagination of popular science magazines and news aggregators worldwide. A quick internet search (on Google, naturally) turned up headlines like “Google’s Quantum Chip Sparks Debate on Multiverse Theory,” “I Typed This Column From a Parallel Universe,” and “Did Google Just Prove That the Multiverse Is Real?”

So what exactly did Google do? And do we need to start preparing for the appearance of Iron Man and Thanos variants duking it out in Times Square?

How does a computer work?

Almost everyone in the U.S. owns a computer, whether it sits on their desk, runs their car’s electrical system, or lets them play Candy Crush while they wait in line at Harris Teeter. Today’s computers can be traced all the way back to ENIAC, the 27-ton, room-sized behemoth completed at UPenn in 1945. Yet despite their differences, the earliest computers and today’s laptops run on the same basic principles: information is stored as a series of “bits” (i.e., 0s and 1s) in the computer’s memory. Electronic components called logic gates then manipulate this information to perform simple operations like addition and subtraction, multiplication and division. If you chain together millions or billions of these logic gates, you end up with a machine that can perform a huge variety of complex tasks much faster than a human being, everything from searching the entire text of the Encyclopedia Britannica to forecasting the weather.
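
To make “logic gates performing addition” concrete, here is a minimal Python sketch that builds binary addition out of nothing but AND, OR, and XOR operations on single bits. It is an illustration of the principle, not a description of how any particular chip is wired; the function names and the 8-bit width are assumptions chosen for the example.

```python
def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def XOR(a: int, b: int) -> int:
    return a ^ b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three single bits using only logic gates; return (sum_bit, carry_out)."""
    partial = XOR(a, b)
    sum_bit = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return sum_bit, carry_out

def add(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers by chaining full adders, one bit at a time
    (a 'ripple-carry' adder). Any carry beyond `width` bits is discarded."""
    result, carry = 0, 0
    for i in range(width):
        a = (x >> i) & 1          # i-th bit of x
        b = (y >> i) & 1          # i-th bit of y
        s, carry = full_adder(a, b, carry)
        result |= s << i          # place the sum bit in position i
    return result

print(add(19, 23))  # 42
```

Everything a computer does, from Candy Crush to weather forecasts, ultimately reduces to enormous numbers of simple bit operations like these.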

While the theory of how computers work is purely mathematical, every computer is also a physical object. In the mid-20th century, engineers wrestled with how they should store the “0s” and “1s” crucial to a computer’s operation. Should they use mechanical switches? Light bulbs? Vacuum tubes? In the end, the invention of the transistor settled the debate. Companies like IBM and Intel were able to create tiny silicon chips containing billions of electronic switches, and the modern computer came to be. And although the physics of the semiconductors inside them is ultimately quantum mechanical, these chips really do function like collections of tiny switches that behave according to the laws of classical (or Newtonian) mechanics. Each switch is either “on” or “off,” just like the light switch in your living room.

But even before the advent of computers, early 20th-century physicists had realized that classical mechanics, the physics of everyday objects, was incomplete. Objects actually obey a different, and extremely bizarre, set of laws described by a branch of physics that came to be known as “quantum mechanics.” Quantum mechanics and classical mechanics make essentially the same predictions when objects are large. But when objects are sufficiently small, they clearly obey quantum mechanical rather than classical laws. So what would happen if we built computers from switches so small that they obey quantum mechanics?

What is a quantum computer?

In the 1980s, a few physicists floated the idea that microscopic quantum systems could be used to perform computation. Then, in 1985, physicist David Deutsch showed that a hypothetical quantum computer could solve certain problems faster than any normal, classical computer. But interest in quantum computation exploded in 1994 when Peter Shor discovered that quantum computers could efficiently factor large numbers into their prime factors. Suddenly, everyone’s ears perked up. Why? Because the difficulty of factoring large numbers is the basis of widely used public-key cryptography. If you’ve ever seen the 1992 Robert Redford movie Sneakers, you understand the implications. A functional quantum computer would be able to break most of the public-key cryptography in use today, whether it’s protecting your phone’s password or Amazon’s inventory or Bill Gates’ Bitcoin wallet.
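
Shor’s algorithm works by turning factoring into a different problem: finding the “period” of the sequence a, a², a³, … taken modulo N, i.e., the smallest r with a^r mod N = 1. The Python sketch below follows that textbook recipe but finds the period by brute force, which is exactly the step that takes exponentially long on a classical computer and that a quantum computer would perform quickly; the rest is ordinary number theory. It is a toy for tiny numbers only, the function names are hypothetical, and it assumes 1 < a < N and N odd and not a prime power, as in the standard presentation.

```python
from math import gcd
from typing import Optional

def find_period(a: int, n: int) -> int:
    """Return the smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).
    Brute force: this loop can take exponentially long for large n, and it is
    the step Shor's algorithm replaces with a fast quantum subroutine."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_period(n: int, a: int) -> Optional[tuple[int, int]]:
    """Try to split n into two factors using the base a; return None on failure."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g            # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                 # odd period: retry with a different a
    half = pow(a, r // 2, n)        # a^(r/2) mod n
    if half == n - 1:
        return None                 # a^(r/2) = -1 (mod n): retry with a different a
    p = gcd(half - 1, n)            # guaranteed to be a nontrivial factor here
    return p, n // p

print(factor_via_period(15, 7))  # (3, 5)
print(factor_via_period(21, 2))  # (7, 3)
```

For 15 and 7, the powers of 7 modulo 15 run 7, 4, 13, 1, so the period is 4, and gcd(7² − 1, 15) = 3 reveals the factor.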

Because we are still in the early days of quantum computing, many issues are unsettled. The biggest question is what a quantum computer should be made of. In the same way that calculating devices were first made out of mechanical parts (think of the abacus), then out of vacuum tubes, and only later out of silicon transistors, the designs of quantum computers are still evolving. Scientists have created very small quantum computers made out of molecules in an NMR machine, atoms in an optical lattice, electron spins in silicon, and superconducting devices. But no one is certain which architecture will eventually yield a large, scalable, commercial device.

How do quantum computers work?

Quantum computers are also composed of tiny switches that represent 0s and 1s. But quantum bits, known as “qubits”, make use of two strange but crucial quantum mechanical properties.

First, qubits don’t have to be either 0 or 1. Instead, they can be in what is called a “superposition” state, an arbitrary combination of 0 and 1 at the same time. If we think of a classical bit as a binary on/off switch, we can think of a qubit as a dimmer switch that can exist in intermediate states between on and off. (The analogy is imperfect: when a qubit is measured, it always reads as either 0 or 1, with probabilities set by the superposition.)
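
In the standard formalism, a qubit’s state is a pair of “amplitudes” (α, β) for 0 and 1, with |α|² + |β|² = 1, and a measurement returns 0 with probability |α|² and 1 with probability |β|². Here is a minimal Python sketch of that rule. It is a toy model, not a simulator of real hardware; it ignores the phases of the amplitudes, which matter for interference between computational paths but not for a single measurement, and the function names are hypothetical.

```python
import math
import random

def measure_probabilities(alpha: complex, beta: complex) -> tuple[float, float]:
    """Return (P(0), P(1)) for the qubit state alpha|0> + beta|1>."""
    p0, p1 = abs(alpha) ** 2, abs(beta) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return p0, p1

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate one measurement: the result is always exactly 0 or exactly 1."""
    p0, _ = measure_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

amp = 1 / math.sqrt(2)              # equal superposition of 0 and 1
rng = random.Random(0)              # fixed seed so runs are repeatable
counts = [0, 0]
for _ in range(10_000):
    counts[measure(amp, amp, rng)] += 1
print(counts)  # each count should land close to 5000
```

The “dimmer” setting shows up only in the statistics of many measurements; any single measurement reads fully on or fully off.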

Second, qubits can experience a phenomenon known as entanglement, which occurs when the state of one qubit is correlated with the state of another qubit, even when the two qubits are very far apart. Again, if we think of qubits as switches, imagine two switches that are coupled such that they are always either both on or both off. What makes entanglement spooky (as Einstein described it) is that it can persist at extremely large distances in the absence of any physical connection between the two objects.
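
In the same toy model, two qubits are described by four amplitudes, one for each joint outcome 00, 01, 10, and 11. The “always both on or both off” pair of switches corresponds to the entangled state (|00⟩ + |11⟩)/√2. The Python sketch below samples joint measurements of that state. Each qubit on its own looks like a fair coin, yet the two always agree. The caveat is important: a classical sampler like this reproduces only this one correlation. What makes entanglement genuinely non-classical appears when the qubits are measured in different ways (as in Bell-test experiments), which this sketch does not model. The names used here are hypothetical.

```python
import math
import random

# Amplitudes for the four joint outcomes of two qubits: the entangled
# state (|00> + |11>) / sqrt(2), i.e. "both off or both on".
BELL_STATE = {"00": 1 / math.sqrt(2), "01": 0.0, "10": 0.0, "11": 1 / math.sqrt(2)}

def sample_joint(state: dict[str, complex], rng: random.Random) -> str:
    """Measure both qubits; each outcome occurs with probability |amplitude|^2."""
    outcomes = list(state)
    weights = [abs(state[k]) ** 2 for k in outcomes]
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(1)
results = [sample_joint(BELL_STATE, rng) for _ in range(1000)]
first_is_one = sum(r[0] == "1" for r in results) / len(results)
print(first_is_one)                          # near 0.5: each qubit alone looks random
print(all(r[0] == r[1] for r in results))    # True: the two qubits always agree
```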

These two properties of qubits have significant consequences. In particular, they allow quantum computers to perform specific types of calculations like factoring much more quickly than classical computers. Crucially, the difference is not merely processor speed, but the physical nature of the components. A quantum computer is not a faster version of a classical computer. It is an entirely different kind of computer. As a rough analogy, consider the difference between a coal power plant and a nuclear power plant. Over the course of history, coal power plants have become bigger and more efficient. But they are still burning coal. A nuclear power plant generates power in an entirely different way.

What did Google do?

The Google “Willow” chip is the latest in a line of chips developed by Google’s Quantum AI project. It is somewhat difficult to find technical details online (possibly for proprietary reasons!) but Google’s quantum computer is based on superconducting qubits. Willow makes several substantial advances over older chips and over other quantum computer prototypes.

First, Willow consists of 105 interacting qubits arranged on a grid. While this number sounds embarrassingly small (a modern desktop computer may have around 1 terabyte, or 8 trillion bits, of storage!), it is quite large for a quantum computer. And keep in mind that describing the state of n qubits requires 2^n numbers, one for every possible combination of 0s and 1s, so a handful of qubits goes a long way.
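
A quick way to feel that exponential growth is to count how much memory a brute-force classical simulation would need to store every amplitude of an n-qubit state. This Python sketch assumes 16 bytes per amplitude (one complex number stored as two 64-bit floats), which is a common choice but an assumption here. Cleverer classical methods avoid storing the full state, so this illustrates the scaling rather than reproducing Google’s own runtime estimate.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: one complex number = two 64-bit floats

def statevector_bytes(n_qubits: int) -> int:
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE

ONE_TERABYTE = 10 ** 12
for n in (30, 50, 105):
    print(n, "qubits:", statevector_bytes(n) / ONE_TERABYTE, "TB")
```

Under these assumptions, 30 qubits fit in about 17 GB, 50 qubits already need about 18 petabytes, and 105 qubits would need roughly 6 × 10^20 terabytes.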

Second, using this small number of qubits, Willow performed a benchmark computational task known as random circuit sampling (RCS) much, much faster than any classical computer. In fact, in under five minutes it completed a computation that, by Google’s estimate, would take a classical supercomputer vastly longer than the age of the universe.
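
Taking Google’s quoted figures at face value, a little arithmetic shows just how lopsided the comparison is. This sketch assumes an age of the universe of about 13.8 billion years and rounds the quantum runtime up to five minutes.

```python
CLAIMED_CLASSICAL_YEARS = 10 ** 25   # Google's quoted estimate for the supercomputer
QUANTUM_MINUTES = 5                  # "under five minutes", rounded up
AGE_OF_UNIVERSE_YEARS = 13.8e9       # approximate
MINUTES_PER_YEAR = 365.25 * 24 * 60

ages_of_universe = CLAIMED_CLASSICAL_YEARS / AGE_OF_UNIVERSE_YEARS
speedup = CLAIMED_CLASSICAL_YEARS * MINUTES_PER_YEAR / QUANTUM_MINUTES
print(f"{ages_of_universe:.1e}")  # 7.2e+14
print(f"{speedup:.1e}")           # 1.1e+30
```

That is roughly 720 trillion times the age of the universe, and a claimed speedup on the order of 10^30 for this one benchmark.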

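Third, Google demonstrated error correction that works as intended. Qubits are fragile, and as the number of qubits in a quantum computer grows, errors accumulate, placing an effective limit on how big a quantum computer can be. Error correction combines many physical qubits into a single, more reliable “logical” qubit. On Willow, making these logical qubits larger reduced the error rate instead of increasing it, which suggests that, in principle, more qubits could be added without the computation breaking down.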
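
Willow’s actual scheme (a so-called surface code) is far more sophisticated, because quantum codes must also handle phase errors and cannot simply copy qubits. But the core idea can be sketched with the simplest classical analogue: store one bit as n copies and take a majority vote, assuming each copy flips independently with probability p. The Python sketch below computes the chance that the vote comes out wrong; the function name is hypothetical.

```python
from math import comb

def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n copies (n odd) returns the wrong bit,
    assuming each copy is flipped independently with probability p."""
    if n % 2 == 0:
        raise ValueError("use an odd number of copies so the vote cannot tie")
    # The vote fails when more than half of the n copies are flipped.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

for p in (0.01, 0.6):  # one error rate below the threshold, one above it
    rates = [logical_error_rate(p, n) for n in (1, 3, 5, 7)]
    print(p, [f"{r:.2e}" for r in rates])
```

With p = 0.01, each additional pair of copies makes the logical bit more reliable. With p = 0.6, which is above this toy code’s threshold of 0.5, adding copies makes things worse. Getting physical error rates below the threshold, so that bigger codes help rather than hurt, is the kind of milestone Willow reached.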

These three points make the Willow chip a major milestone in the development of a quantum computer.

What does quantum mechanics mean philosophically?

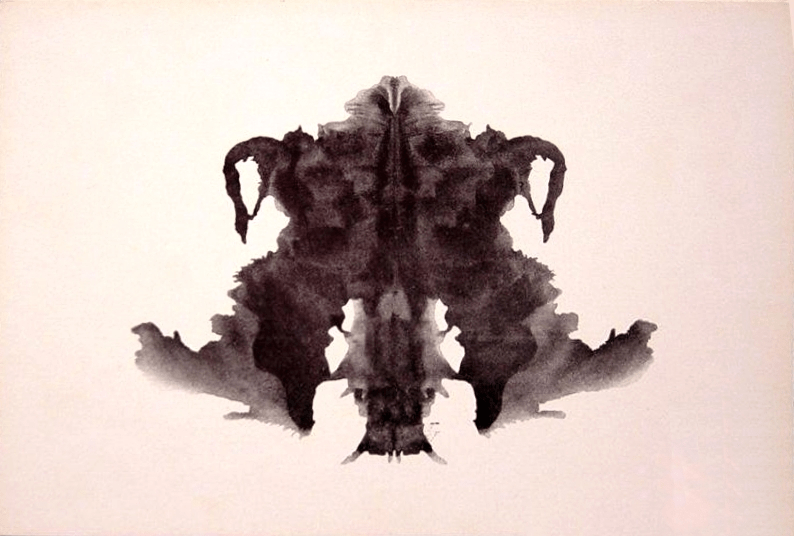

There are three major interpretations of quantum mechanics: neorealism, Copenhagen, and many worlds. All three interpretations are metaphysical rather than empirical in nature; they are all consistent with all experimental observations and with the success of quantum computation. In other words, we don’t currently know of any experiments that have been done or could be done which would prove or disprove any of these interpretations.

Think of these interpretations in terms of an ink blot test. All three observers agree on every detail of the ink blot’s actual shape. But one observer thinks the ink blot looks like a moth, another thinks it looks like a frog, and the third thinks it looks like his parents fighting. No matter how carefully you study the ink blot, you won’t be able to prove or disprove any one of these interpretations.

All three interpretive schools of quantum mechanics are trying to explain a phenomenon known as wavefunction collapse. Briefly, quantum objects appear to undergo two kinds of dynamics: “unitary” and “non-unitary.” Unitary dynamics often involve spooky quantum properties like tunneling and teleportation and happen when the quantum object isn’t being measured. Non-unitary dynamics look more like classical mechanics and happen when the object is being measured. So, somehow, measuring the object makes it collapse from a quantum state onto a classical state.
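
In the textbook formalism, “unitary” dynamics means the state is multiplied by a matrix U satisfying U†U = I (U† is the conjugate transpose). Such an evolution conserves total probability and can always be undone. A measurement instead projects the state onto the observed outcome, discarding the other amplitudes irreversibly. The Python sketch below contrasts the two, using the standard Hadamard gate as an example unitary and an arbitrarily chosen state 0.6|0⟩ + 0.8i|1⟩; the helper names are hypothetical.

```python
import math

Vector = list[complex]
Matrix = list[list[complex]]

def apply(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product m @ v."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def dagger(m: Matrix) -> Matrix:
    """Conjugate transpose; for a unitary U, dagger(U) undoes U."""
    return [[m[j][i].conjugate() for j in range(len(m))] for i in range(len(m[0]))]

def norm(v: Vector) -> float:
    """Square root of the total probability; stays 1 under unitary evolution."""
    return math.sqrt(sum(abs(x) ** 2 for x in v))

s = 1 / math.sqrt(2)
HADAMARD: Matrix = [[s, s], [s, -s]]          # a standard unitary gate
PROJECT_ON_0: Matrix = [[1, 0], [0, 0]]       # effect of a measurement reading "0" (before renormalizing)

state: Vector = [0.6, 0.8j]                   # 0.6|0> + 0.8i|1>, total probability 1

evolved = apply(HADAMARD, state)
print(round(norm(evolved), 12))               # 1.0: probability is conserved
recovered = apply(dagger(HADAMARD), evolved)
print(all(abs(a - b) < 1e-12 for a, b in zip(recovered, state)))  # True: reversible

collapsed = apply(PROJECT_ON_0, state)
print(collapsed)                              # the |1> amplitude is gone for good
```

Here a measurement would read 0 with probability 0.36; once it does, no matrix can restore the discarded |1⟩ amplitude. That irreversible step is the “collapse” the interpretations below try to explain.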

Neorealism hews closely to a classical view of reality. It holds that quantum objects really do have properties independent of measurement, just like classical objects. The behavior of quantum objects merely looks quantum mechanical because they are being steered by invisible, undetectable fields that travel faster than the speed of light (a big “no no” in physics).

The Copenhagen interpretation, which is considered the “orthodox” interpretation, maintains that quantum objects don’t actually have classical properties until they are measured. When measurement occurs, a quantum system collapses onto a single classical state. But what constitutes “measurement”? While some defenders of the Copenhagen interpretation believe that (human?) consciousness is what produces wavefunction collapse, others take a pragmatic “shut up and calculate” approach that simply ignores what they view as a purely philosophical question.

Finally, the many worlds interpretation of quantum mechanics was developed in 1957 by Hugh Everett. According to the many worlds interpretation, when a quantum object is measured, its wavefunction doesn’t collapse. Rather, the universe splits into multiple branches. For example, if a quantum coin is placed into a superposition state of heads plus tails and is then measured, the universe splits in two. In one branch of the multiverse, the result of the measurement is heads. In the other branch, the result is tails.

As strange as it sounds, these interpretations are all taken seriously by physicists. Moreover, anyone looking for a “normal,” “common-sense,” “non-insane” interpretation of quantum mechanics will be severely disappointed. None exists. Quantum mechanics is unavoidably weird.

What about the multiverse?

Finally, we reach those sensationalistic headlines: Did Willow prove that the multiverse exists?

In a word, no.

The connection between quantum computing and the multiverse arose thanks to the many worlds interpretation of quantum mechanics. David Deutsch, one of the earliest researchers into quantum computation, believes in the many worlds interpretation. He argues that quantum computing is so much more powerful than classical computing because quantum processors are effectively tapping into the computing power of their counterparts in other branches of the multiverse.

However, his is not the only explanation for the power of quantum computing. And since all interpretations of quantum mechanics predict the efficacy of quantum computing, its success can hardly count as evidence for the multiverse. In the same way, if three men stand accused of a crime and I discover that the crime was committed by a man, I have not made any progress towards identifying which of the three was actually the criminal!

In summary, Google’s progress is significant and physicists are excited about quantum computation for wholly legitimate reasons. But the headlines have been overblown and sensationalistic. A working, practically relevant quantum computer would be groundbreaking, but it is probably still decades away. In the meantime, there’s no need to be on the lookout for intergalactic wormholes or visits from the TVA.
